Contents

Prologue

1. About this document

Part 1: Grasping the scales

1.1 Size and age of the universe

1.2 Age and structure of the Milky Way

1.3 Methuselah stars

1.4 The expanding universe

1.5 Our location in the universe

1.6 The universe as a living organism

Part 2: Drake’s equation

2.1 Aspects of Drake's equation

2.2 Number of stars

2.2.1 Rate of stars

2.2.2 Lifespan of stars

2.2.3 Habitability of red dwarf systems

2.2.4 Populations of stars

2.2.5 Peak population of MS stars

2.2.6 Generations of civilizations

2.3 Number of exoplanets

2.3.1 Earth analog

2.3.2 Habitable zone

2.3.3 Habitability outside the CHZ

2.4 Life on exoplanets

2.4.1 Abiogenesis

2.4.2 The problem of homochirality

2.4.3 Life is abundant

2.4.4 Selective destruction

2.5 Intelligent life

2.5.1 Defining intelligence

2.5.2 Intelligent life is unique

2.5.3 Possibility of communication

2.5.5 Timeline of intelligent life

2.6 The number L

2.6.1 Life expectancy

2.6.2 Extinction event

2.6.3 Our closest neighbors

2.7 Number of civilizations

Part 3: Expanding Drake’s equation

3.1 Exponential vs logistic growth

3.2 Statistical Drake’s equation

3.3 The normal distribution

3.4 Distribution of MS stars

3.5 Kardashev’s scale

3.6 Distribution of civilizations

3.7 ETIQ

3.8 Peak population

Part 4: Fermi’s paradox

4.1 About Fermi’s paradox

4.2 The horizon problem

4.3 A thought experiment

4.4 Information paradox

4.5 Technological event horizons

4.6 Entropy and invisibility

4.7 Cosmological exclusion principle

4.8 The mediocrity principle

4.9 Speciation vs colonization

Epilogue

5.1 So where are they?

5.2 The anthropic principle

5.3 Principle of non-intervention

5.4 Dyson spheres

5.5 UFO’s as psychic phenomena

5.6 To Alpha Centauri and beyond

Appendix

6.1 Grasping the scales once more…

Prologue

1. About this document

Part 1: Grasping the scales

1.1 Size and age of the universe

1.2 Age and structure of the Milky Way

1.3 Methuselah stars

1.4 The expanding universe

1.5 Our location in the universe

1.6 The universe as a living organism

Part 2: Drake’s equation

2.1 Aspects of Drake's equation

2.2 Number of stars

2.2.1 Rate of stars

2.2.2 Lifespan of stars

2.2.3 Habitability of red dwarf systems

2.2.4 Populations of stars

2.2.5 Peak population of MS stars

2.2.6 Generations of civilizations

2.3 Number of exoplanets

2.3.1 Earth analog

2.3.2 Habitable zone

2.3.3 Habitability outside the CHZ

2.4 Life on exoplanets

2.4.1 Abiogenesis

2.4.2 The problem of homochirality

2.4.3 Life is abundant

2.4.4 Selective destruction

2.5 Intelligent life

2.5.1 Defining intelligence

2.5.2 Intelligent life is unique

2.5.3 Possibility of communication

2.5.5 Timeline of intelligent life

2.6 The number L

2.6.1 Life expectancy

2.6.2 Extinction event

2.6.3 Our closest neighbors

2.7 Number of civilizations

Part 3: Expanding Drake’s equation

3.1 Exponential vs logistic growth

3.2 Statistical Drake’s equation

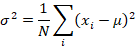

3.3 The normal distribution

3.4 Distribution of MS stars

3.5 Kardashev’s scale

3.6 Distribution of civilizations

3.7 ETIQ

3.8 Peak population

Part 4: Fermi’s paradox

4.1 About Fermi’s paradox

4.2 The horizon problem

4.3 A thought experiment

4.4 Information paradox

4.5 Technological event horizons

4.6 Entropy and invisibility

4.7 Cosmological exclusion principle

4.8 The mediocrity principle

4.9 Speciation vs colonization

Epilogue

5.1 So where are they?

5.2 The anthropic principle

5.3 Principle of non-intervention

5.4 Dyson spheres

5.5 UFO’s as psychic phenomena

5.6 To Alpha Centauri and beyond

Appendix

6.1 Grasping the scales once more…

1. About this document

Drake’s equation has been a great inspiration of mine. On one hand, it is the straightforward and simple way by which this equation estimates the possible number of extraterrestrial civilizations. On the other hand, it is the analysis of the parameters of the equation which makes one begin a journey into the universe.

It is estimated that there are 100 billion stars in the Milky Way, and that there are probably 100 billion galaxies in the universe. The universe is full of galaxies, the galaxies are full of stars, and most of the stars may have planets, on some of which life may have evolved, so that there could be a considerable number of other civilizations out there. Even so, the distances are so great, and our means of communication at the moment so limited, that the chance of finding and contacting an extraterrestrial civilization like our own is small.

This is not to say that life is rare in the universe. On the contrary, abiogenesis suggests that only a few basic chemical elements are needed for life to begin. However, the evolution of primitive life to the level of an intelligent species is another matter. Thus it is possible that, although life in the form of microbes is quite abundant in the universe, advanced life in the form of intelligent lifeforms may be rather sparse and unique, so that, given the great distances, the possibility of communication is remote.

This brings us to Fermi’s paradox: Even if the density of extraterrestrial civilizations in the universe is low (there may be a considerable number of other civilizations, but the distance between civilizations is also great), some of those civilizations should have evolved sufficiently to have discovered interstellar travel, and to have visited other worlds like ours. So where are they? Thus the paradox.

A possible answer to this paradox is, I believe, twofold. On one hand, it will be difficult for two civilizations that are less advanced in interstellar travel and communication, like our own, to communicate with or visit each other. On the other hand, a highly advanced civilization, capable of sophisticated communication, and knowing ways of interstellar travel which are unimaginable to us at present, will practically be undetectable by a less advanced civilization like ours.

Such a civilization will have passed beyond its own technological singularity, thus there will be a ‘technological event horizon’ between them and us, and they would probably have adopted ways and states of existence beyond our own brain capacity and ability of perception. Thus even if they came we would perceive them as no more than ‘lights in the sky,’ explaining them in terms we are already familiar with (e.g. weather balloons). On the other hand, if they made themselves apparent to us, they would likely cause a culture shock, since we are basically ill-prepared for the contact, and our reaction would mostly be irrational and violent.

In such a sense we may say that our own brain and nature have effectively invented a protective mechanism for the event of such a contact. This is what I call the ‘cosmological exclusion principle.’ On one hand, two primitive civilizations like our own, who destroy their environment and make war, would probably annihilate each other if they came in contact. Thus nature has provided for great interstellar distances, so that it is difficult for primitive civilizations to meet each other. On the other hand, a sufficiently advanced civilization, leaving a small technological imprint on its surroundings, and having plentiful resources at its disposal in order to prevent war, will avoid interfering with less advanced civilizations, either because of the lack of motive, or because of the consequences. This is what I call the ‘principle of non-intervention.’

Thus the important question is not ‘Where are they?’ Instead it is ‘What if they had come?’ The immaturity of our civilization, which naturally stems from our own ignorance of the Cosmos, is responsible both for the blind faith in aliens, as if they were self-evident Gods, and for the axiomatic rejection of their existence, because of the threat they would pose to our own social establishment.

Here however I would like to make the following suggestion. UFO sightings are usually accompanied by unexplained premonitions and strange sentiments. Since we have never really captured aliens or one of their machines (despite conspiracy theories), our experience and knowledge of them is purely psychological and unconscious. This is why I believe that, after having accepted at least the possibility of their existence, we should treat aliens or their flying machines, instead of material entities or tangible objects, as purely psychic phenomena. The natural process by which a species is gradually prepared on a psychological level to come in contact with an alien species can be called ‘preparation.’ This may explain the increasing number of UFO ‘sightings’ which occur nowadays.

According to the anthropic principle, we are fine-tuned with the universe so that we are able not only to understand the universe, but also to understand why we understand. If, according to the aforementioned cosmological exclusion principle, there is some kind of pre-established arrangement of the distances between civilizations, so that their mutual destruction is avoided, then there may also be some kind of providence for their communication or contact, so that two civilizations will meet each other only when they are both sufficiently advanced. Therefore the fine-tuning on the physical level can also exist on the psychological and mental level.

Perhaps this didn’t happen when the Europeans arrived in the New World, as the more advanced Europeans subjugated or exterminated the less advanced Native Americans. But in this example, although it is instructive, the scale is much smaller and narrower. Throughout human history there hasn’t been any contact or conflict between a ‘modern civilization’ and ‘ape-men.’ If we ever discover ‘stone people’ on another planet, our intention will probably be to avoid them, since machines work much better and cheaper than slaves, while any damage caused to them or to their planet will be inversely proportional to the sophistication of our machinery, and to our ability to extract resources.

Recent observations suggest that the maximum rate of star production in the universe took place quite early, about 5 billion years after the Big Bang. If the life expectancy of a G-type star like our Sun is approximately 10 billion years, then the maximum number of stars like our Sun in the universe is occurring right now, about 15 billion years after the Big Bang. If we allow an interval of 5 billion years for intelligent life to appear around a G-type star, as long as it took us to appear in our own solar system, then we should expect the peak population of civilizations in the universe about 20 billion years after the Big Bang, 5 billion years from now. This might explain the problem of ‘cosmic silence,’ since the number of other civilizations out there will still be low. However it is also probable that a very few civilizations have had the time to advance to a high level, already visiting and terraforming exoplanetary systems.

Yet there seems to be enough empty space available for all in the universe. If right now just a couple out of 100 habitable planets have intelligent life as we know it, there will still be 98 unoccupied planets available to those intelligent lifeforms in the future. Breakthrough Starshot is the latest project planning a journey to Alpha Centauri. In the near future we may also discover the first exoplanetary civilization like our own, thus answering the everlasting question of whether we are alone in the universe. But perhaps the most important question is how we treat life on our own planet, as right now we are going through a sixth mass extinction of species.

1.1 Size and age of the universe

It is important to notice that the age of the Milky Way galaxy is approximately equal to the age of the universe (although it seems that the thin disk of the Milky Way formed about 5 billion years after the Big Bang). Apart from the implications for modern cosmology (e.g. the universe seems to have formed by accretion from a pre-existing distribution of matter, instead of by the Big Bang), such an observation makes it possible that civilizations much older, and thus also much more advanced, than our own may exist out there: the Sun formed 5 billion years ago, while the first MS (main sequence) stars may have formed 5 billion years earlier (when the thin disk of the Milky Way formed). The paradox which arises is that if they existed they would already have visited us. But this isn’t necessarily true if advanced civilizations avoid contact with civilizations which, compared to them, are less advanced or primitive, like our own. But let’s take a look at the huge cosmological scales first.

Visualization of the observable universe

[https://en.wikipedia.org/wiki/Observable_universe]

The distance of 93 billion light years in the previous picture refers to the ‘comoving’ diameter of the observable universe, which takes into account the expansion of spacetime since the Big Bang. In terms of light-travel distance, the horizon of the observable universe is 13.8 billion light years, the distance light has travelled since the Big Bang, 13.8 billion years ago. Thus from our own perspective we can see astronomical objects whose light has travelled for as long as 13.8 billion years, but not further. This might change when the nature of dark energy is understood (since the stretching of spacetime is related to dark energy).

Thus the universe is enormous. And this could be one of many universes in the multiverse. What exactly is meant by ‘multiverse’ is uncertain. But if another universe is connected to ours through a ‘wormhole’ (or through an extra dimension), so that we could travel ‘instantaneously’ to some place in that universe, then it could be more convenient to search for extraterrestrial life in that universe instead of our own solar neighborhood. This is an example of how relative the meaning of distance might be, not to mention the ambiguity of the term ‘extraterrestrial’ lifeform.

Our own universe has hundreds of billions of galaxies, and it is estimated that there are hundreds of billions of stars in our galaxy alone:

It has been said that counting the stars in the Universe is like trying to count the number of sand grains on a beach on Earth. We might do that by measuring the surface area of the beach, and determining the average depth of the sand layer. If we count the number of grains in a small representative volume of sand, by multiplication we can estimate the number of grains on the whole beach.

For the Universe, the galaxies are our small representative volumes, and there are something like $10^{11}$ to $10^{12}$ stars in our Galaxy, and there are perhaps something like $10^{11}$ or $10^{12}$ galaxies. With this simple calculation you get something like $10^{22}$ to $10^{24}$ stars in the Universe. This is only a rough number, as obviously not all galaxies are the same, just like on a beach the depth of sand will not be the same in different places.

No one would try to count stars individually; instead we measure integrated quantities like the number and luminosity of galaxies. ESA’s infrared space observatory Herschel has made an important contribution by ‘counting’ galaxies in the infrared, and measuring their luminosity in this range, something never before attempted.

[http://www.esa.int/Our_Activities/Space_Science/Herschel/How_many_stars_are_there_in_the_Universe]
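The multiplication described in the quoted passage can be written out as a short sketch. The function name is hypothetical, and the inputs are just the rough bounds quoted above, so the result is an order-of-magnitude estimate rather than a measurement.

```python
def estimate_total_stars(stars_per_galaxy: float, number_of_galaxies: float) -> float:
    """Order-of-magnitude star count: a typical galaxy times the number of galaxies."""
    return stars_per_galaxy * number_of_galaxies


# Lower and upper bounds taken from the quoted ranges (10^11 to 10^12 for both factors).
low_estimate = estimate_total_stars(1e11, 1e11)
high_estimate = estimate_total_stars(1e12, 1e12)

print(f"Estimated stars in the Universe: {low_estimate:.0e} to {high_estimate:.0e}")
```

The two bounds differ by a factor of one hundred, which shows how uncertain the estimate remains when both factors are known only to within an order of magnitude.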

Here I would like to mention a principle which I call ‘cosmic loneliness.’ It is relevant to the notion of ‘cosmic silence’ (the fact that we have never received radio signals from an alien civilization). Even if the universe is teeming with life, the scales are so enormous that communication as we know it is highly improbable. Radio signals weaken with distance, so that ordinary broadcasts may become undetectable after a few light years, and any kind of information encoded in those signals cannot travel faster than light. Therefore all messages travelling in the universe right now will be rendered obsolete and meaningless after a while. As a consequence it seems that even if information in general or life in particular are common and plentiful in the universe, communication in the form of dialectic conversation was not the original purpose of the universe. This might also make us wonder whether we earthlings communicate with each other because we really enjoy it, or simply because we find it necessary.

1.2 Age and structure of the Milky Way

An intriguing and also surprising aspect is that the Milky Way, our own galaxy, has an age approximately equal to the age of the universe itself. The thin disk of the Milky Way however is somewhat younger; it was created 5 billion years after the Big Bang:

Night sky from a hypothetical planet within the Milky Way 10 billion years ago

The Milky Way began as one or several small over-densities in the mass distribution in the Universe shortly after the Big Bang. Some of these over-densities were the seeds of globular clusters in which the oldest remaining stars in what is now the Milky Way formed. These stars and clusters now comprise the stellar halo of the Milky Way. Within a few billion years of the birth of the first stars, the mass of the Milky Way was large enough so that it was spinning relatively quickly. Due to conservation of angular momentum, this led the gaseous interstellar medium to collapse from a roughly spheroidal shape to a disk. Therefore, later generations of stars formed in this spiral disk. Most younger stars, including the Sun, are observed to be in the disk.

Since the first stars began to form, the Milky Way has grown through both galaxy mergers (particularly early in the Milky Way’s growth) and accretion of gas directly from the Galactic halo. The Milky Way is currently accreting material from two of its nearest satellite galaxies, the Large and Small Magellanic Clouds, through the Magellanic Stream. Properties of the Milky Way suggest it has undergone no mergers with large galaxies in the last 10 billion years; its neighbor the Andromeda Galaxy appears to have a more typical history shaped by more recent mergers with relatively large galaxies.

According to recent studies, the Milky Way as well as Andromeda lie in what in the galaxy color-magnitude diagram is known as the green valley, a region populated by galaxies in transition from the blue cloud (galaxies actively forming new stars) to the red sequence (galaxies that lack star formation). Star-formation activity in green valley galaxies is slowing as they run out of star-forming gas in the interstellar medium. In simulated galaxies with similar properties, star formation will typically have been extinguished within about five billion years from now, even accounting for the expected short-term increase in the rate of star formation due to the collision between both the Milky Way and the Andromeda Galaxy.

Several individual stars have been found in the Milky Way’s halo with measured ages very close to the 13.80-billion-year age of the Universe. Measurements of thin disk stars yield an estimate that the thin disk formed 8.8 ± 1.7 billion years ago. These measurements suggest there was a hiatus of almost 5 billion years between the formation of the galactic halo and the thin disk.

[https://en.wikipedia.org/wiki/Milky_Way#Formation]

It seems that conditions were not ripe for life to appear in the early universe. Still, besides the consequences with respect to the true structure of the universe, the age of the Milky Way makes it possible that some, even if few, civilizations have had the time to evolve long before we did. For example, if some G-type stars like our Sun formed at the moment the thin disk of the Milky Way formed, and it took 5 billion years for the first civilizations to appear in those star systems, as long as it took us to evolve in our solar system, then these civilizations will be 5 billion years more advanced than us, if they still exist.

1.3 Methuselah stars

It is intriguing that there are stars whose age is as old as the Big Bang, and that stars in the galactic halo may be slightly older. This may also suggest a different model for the formation of the universe (for example, in the same way galaxies form, by accretion). Besides this, although old stars lacked the metallicity necessary to have planetary systems, some of them may have harbored life very early in the history of the universe. This is the oldest star currently known:

This is a Digitized Sky Survey image of the oldest star with a well-determined age in our galaxy. The aging star, cataloged as HD 140283, lies 190.1 light-years away.

A team of astronomers using NASA’s Hubble Space Telescope has taken an important step closer to finding the birth certificate of a star that’s been around for a very long time. The star could be as old as 14.5 billion years (plus or minus 0.8 billion years), which at first glance would make it older than the universe’s calculated age of about 13.8 billion years, an obvious dilemma.

This ‘Methuselah star,’ cataloged as HD 140283, has been known about for more than a century because of its fast motion across the sky. The high rate of motion is evidence that the star is simply a visitor to our stellar neighborhood. Its orbit carries it down through the plane of our galaxy from the ancient halo of stars that encircle the Milky Way, and will eventually slingshot back to the galactic halo.

This conclusion was bolstered by astronomers in the 1950s who were able to measure a deficiency of heavier elements in the star as compared to other stars in our galactic neighborhood. The halo stars are among the first inhabitants of our galaxy and collectively represent an older population than the stars, like our sun, that formed later in the disk. This means that the star formed at a very early time before the universe was largely ‘polluted’ with heavier elements forged inside stars through nucleosynthesis. (The Methuselah star has an anemic 1/250th as much of the heavy element content of our sun and other stars in our solar neighborhood.)

This Methuselah star has seen many changes over its long life. It was likely born in a primeval dwarf galaxy. The dwarf galaxy eventually was gravitationally shredded and sucked in by the emerging Milky Way over 12 billion years ago. The star retains its elongated orbit from that cannibalism event. Therefore, it’s just passing through the solar neighborhood at a rocket-like speed of 800,000 miles per hour. It takes just 1,500 years to traverse a piece of sky with the angular width of the full Moon.

The star, which is at the very first stages of expanding into a red giant, can be seen with binoculars as a 7th-magnitude object in the constellation Libra. Hubble’s observational prowess was used to refine the distance to the star, which comes out to be 190.1 light-years. Once the true distance is known, an exact value for the star’s intrinsic brightness can be calculated. Knowing a star’s intrinsic brightness is a fundamental prerequisite to estimating its age. The new Hubble age estimates reduce the range of measurement uncertainty, so that the star’s age overlaps with the universe’s age.

[https://www.nasa.gov/mission_pages/hubble/science/hd140283.html]
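The step from distance to intrinsic brightness mentioned in the quoted passage can be illustrated with the standard distance modulus relation, M = m - 5 log10(d / 10 pc). This is only a sketch: the relation itself is not stated in the quoted text, the apparent magnitude of 7.0 is a rounding of the ‘7th-magnitude’ figure above, the function name is hypothetical, and interstellar extinction is ignored.

```python
import math

LIGHT_YEARS_PER_PARSEC = 3.2616  # standard conversion factor


def absolute_magnitude(apparent_magnitude: float, distance_ly: float) -> float:
    """Distance modulus: the magnitude a star would have if seen from 10 parsecs."""
    distance_pc = distance_ly / LIGHT_YEARS_PER_PARSEC
    return apparent_magnitude - 5.0 * math.log10(distance_pc / 10.0)


# Assumption: "7th-magnitude" rounded to exactly 7.0; the distance is the quoted Hubble value.
apparent_magnitude_hd140283 = 7.0
distance_ly_hd140283 = 190.1

intrinsic = absolute_magnitude(apparent_magnitude_hd140283, distance_ly_hd140283)
print(f"Approximate absolute magnitude of HD 140283: {intrinsic:.2f}")
```

Because the distance enters through a logarithm, a small error in the distance shifts the absolute magnitude only slightly, but that shift still matters for the age estimate, which is why the refined Hubble distance narrows the uncertainty.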

Although this particular star originally formed in the halo of the Milky Way, and therefore might never have been suitable for life as we know it, there are other stars which have always been in our solar neighborhood, and which seem to be as old as the thin disk of the Milky Way (10 billion years old). We will mention such stars, as having been potentially habitable, later on.

1.4 The expanding universe

As if the enormous distances which separate the stars were not enough, such distances grow bigger with time due to the expansion of the universe. On the small scale the cosmic expansion is not noticeable, but on the large scale it becomes significant. A measure of this cosmic expansion is Hubble’s constant:

This sequence of images taken with NASA’s Hubble Space Telescope chronicles the rhythmic changes in a rare class of variable star (located in the center of each image) in the spiral galaxy M100. This class of pulsating star is called a Cepheid Variable. The Cepheid in this Hubble picture doubles in brightness (24.5 to 25.3 apparent magnitude) over a period of 51.3 days.

[http://hubblesite.org/image/225/news_release/1994-49]

In the 1920s, Edwin Hubble, using the newly constructed 100" telescope at Mount Wilson Observatory, detected variable stars in several nebulae. Nebulae are diffuse objects whose nature was a topic of heated debate in the astronomical community: were they interstellar clouds in our own Milky Way galaxy, or whole galaxies outside our galaxy? This was a difficult question to answer because it is notoriously difficult to measure the distance to most astronomical bodies since there is no point of reference for comparison. Hubble’s discovery was revolutionary because these variable stars had a characteristic pattern resembling a class of stars called Cepheid variables. By knowing the luminosity of a source it is possible to measure the distance to that source by measuring how bright it appears to us: the dimmer it appears the farther away it is. Thus, by measuring the period of these stars (and hence their luminosity) and their apparent brightness, Hubble was able to show that these nebulae were not clouds within our own Galaxy, but were external galaxies far beyond the edge of our own Galaxy.

Hubble’s second revolutionary discovery was based on comparing his measurements of the Cepheid-based galaxy distance determinations with measurements of the relative velocities of these galaxies. He showed that more distant galaxies were moving away from us more rapidly:

$$v = H_0 \, d$$

where v is the speed at which a galaxy moves away from us, and d is its distance. The constant of proportionality $H_0$ is now called the Hubble constant. The common unit of velocity used to measure the speed of a galaxy is km/sec, while the most common unit for measuring the distance to nearby galaxies is called the Megaparsec (Mpc) which is equal to 3.26 million light years. Thus the units of the Hubble constant are (km/sec)/Mpc.

This discovery marked the beginning of the modern age of cosmology. Today, Cepheid variables remain one of the best methods for measuring distances to galaxies and are vital to determining the expansion rate (the Hubble constant) and age of the universe.

The expansion or contraction of the universe depends on its content and past history. With enough matter, the expansion will slow or even become a contraction. On the other hand, dark energy drives the universe towards increasing rates of expansion. The current best estimate of expansion (the Hubble Constant) is 73.8 km/sec/Mpc.

[https://map.gsfc.nasa.gov/universe/uni_expansion.html]
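Hubble's law as quoted above can be tried out numerically. The following is only a minimal sketch: it uses the value of 73.8 km/sec/Mpc and the conversion of 3.26 million light years per Mpc from the text, while the function names and the example distance of 100 Mpc are assumptions made for illustration. It also naively extends the linear law to very large distances, which a full cosmological model would not do.

```python
# Hubble's law: v = H0 * d (linear approximation, illustrative only).
H0_KM_S_PER_MPC = 73.8     # Hubble constant quoted in the text
C_KM_S = 299_792.458       # speed of light in km/s
LY_PER_MPC = 3.26e6        # 1 Mpc = 3.26 million light years (from the text)


def recession_velocity(distance_mpc, h0=H0_KM_S_PER_MPC):
    """Recession velocity in km/s of a galaxy at the given distance in Mpc."""
    return h0 * distance_mpc


def hubble_distance_ly(h0=H0_KM_S_PER_MPC):
    """Distance in light years at which v = H0 * d reaches the speed of light."""
    return (C_KM_S / h0) * LY_PER_MPC


if __name__ == "__main__":
    # Hypothetical galaxy at 100 Mpc (assumed example distance).
    print(f"v at 100 Mpc: {recession_velocity(100.0):.0f} km/s")
    print(f"Distance where v = c: {hubble_distance_ly() / 1e9:.2f} billion ly")
```

With this value of the Hubble constant, the distance at which the recession velocity formally reaches the speed of light comes out at a little over 13 billion light years.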

The notion of universal expansion is rather ambiguous. Presumably, at a distance of roughly 13.8 billion light years, near the horizon of the observable universe, galaxies will be receding from us at the speed of light, while astronomical objects beyond that distance can be moving away from us even faster than light. This paradox is commonly resolved by the metric expansion of spacetime (spacetime itself can expand faster than light, while photons do not travel faster than light). Still, a paradox remains: a point in spacetime beyond the horizon of the observable universe can be receding faster than light. It has been observed that the expansion of the universe began to accelerate about 5 billion years ago:

According to measurements, the universe’s expansion rate was decelerating until about 5 billion years ago due to the gravitational attraction of the matter content of the universe, after which time the expansion began accelerating. The source of this acceleration is currently unknown. Physicists have postulated the existence of dark energy, appearing as a cosmological constant in the simplest gravitational models as a way to explain the acceleration.

[https://en.wikipedia.org/wiki/Metric_expansion_of_space]

This observation coincides with another one which shows that star formation in the universe dropped dramatically during the same period. If this is true then in the near cosmic future the universe will become a vast desert of red dwarfs (stars much smaller than the Sun, so that they live much longer), since more massive stars like our Sun will have died out. An encouraging thought though is that there may be other universes to compensate for the loss of our own universe. Perhaps a better cosmological view will be necessary to accommodate the existence of other universes. If all spacetime was created by the ‘Big Bang,’ then this ‘Big Bang’ will account for all spacetime. But if, for example, we consider an accretion model for the universe (so that the whole universe is a local concentration of matter within a much wider region of spacetime), then the existence of other universes with various sizes and ages will be possible.

Our own Milky Way galaxy is an island within the vast ocean of intergalactic space. While the Milky Way is about 100 thousand light years across, the Andromeda galaxy, which is the closest large galaxy, is located 2.5 million light years away. This is where, within the vast spiral structure of our galaxy, the Sun and the Earth reside:

Artist’s concept of what astronomers now believe is the overall structure of the spiral arms in our Milky Way galaxy.

We live in an island of stars called the Milky Way, and many know that our Milky Way is a spiral galaxy. In fact, it’s a barred spiral galaxy, which means that our galaxy probably has just two major spiral arms, plus a central bar that astronomers are only now beginning to understand.

Our galaxy is about 100,000 light-years wide. We’re about 25,000 light-years from the center of the galaxy. It turns out we’re not located in one of the Milky Way’s two primary spiral arms. Instead, we’re located in a minor arm of the galaxy. Our local spiral arm is the Orion Arm. It’s between the Sagittarius and Perseus Arms of the Milky Way. It’s approximately 10,000 light years in length. Our sun, the Earth, and all the other planets in our solar system reside within this Orion Arm. We’re located close to the inner rim of this spiral arm, about halfway along its length.

The Orion Arm, or Orion Spur, has other names as well. It’s sometimes simply called the Local Arm, or the Orion-Cygnus Arm, or the Local Spur. It is named for the constellation Orion the Hunter, which is one of the most prominent constellations of the Northern Hemisphere in winter (Southern Hemisphere summer). Some of the brightest stars and most famous celestial objects of this constellation are neighbors of sorts to our sun, located within the Orion Arm. That’s why we see so many bright objects within the constellation Orion – because when we look at it, we’re looking into our own local spiral arm.

[http://earthsky.org/space/does-our-sun-reside-in-a-spiral-arm-of-the-milky-way-galaxy]

Some astronomers have suggested that there is a GHZ (galactic habitable zone) in the Milky Way, in the same sense that there is a CHZ (circumstellar habitable zone) in our solar system. Thus, just as the Earth is located in the middle of the CHZ, the Sun would by analogy be located in the middle of the GHZ. Estimates of the width of such a zone vary. The main argument here is that if not all parts of the Milky Way are suitable for life as we know it, then we may concentrate the search for extraterrestrial life on the habitable areas:

In astrobiology and planetary astrophysics, the galactic habitable zone is the region of a galaxy in which life might most likely develop. More specifically, the concept of a galactic habitable zone incorporates various factors, such as metallicity and the rate of major catastrophes such as supernovae, to calculate which regions of the galaxy are more likely to form terrestrial planets, initially develop simple life, and provide a suitable environment for this life to evolve and advance.

In a paper published in 2001 by astrophysicists Gonzalez, Brownlee and Ward, it is stated that regions near the galactic halo would lack the heavier elements required to produce habitable terrestrial planets, thus creating an outward limit to the size of the galactic habitable zone. Being too close to the galactic center, however, would expose an otherwise habitable planet to numerous supernovae and other energetic cosmic events. Therefore, the authors established an inner boundary for the galactic habitable zone, located just outside the galactic bulge.

Currently the galactic habitable zone is often viewed as an annulus 4-10 kpc (≈13,000-33,000ly) from the galactic center. Galactic habitable zone theory has been criticized due to an inability to quantify accurately the factors making a region of a galaxy favorable for the emergence of life.

[https://en.wikipedia.org/wiki/Galactic_habitable_zone]

Whether the universe is expanding, contracting, or even static may fundamentally depend on the way we perceive the things around us, either with telescopes or with our own eyes. When we think about the world and the universe, no true motion takes place inside our minds, except for the electric discharges which propagate through our neural network:

Computer simulated image of an area of space more than 50 million light years across, presenting a possible large-scale distribution of light sources in the universe.

[https://en.wikipedia.org/wiki/Observable_universe]

The existence of the universe as a whole and coherent entity is related to the cosmological principle, the notion that the universe is homogeneous on the large scale. Some cosmologists have argued that homogeneity breaks down at very large scales. Such an argument is supported by the recent discoveries of immense structures at the edge of the observable universe, such as the Huge Large Quasar Group, or the Hercules-Corona Borealis Great Wall. However, the way matter is distributed in the universe is not so significant, as long as the different and distant parts stay together. How the two opposite parts of the universe are instantaneously connected to each other, so that the universe does not collapse, is a great mystery of modern science, because, as we believe, nothing can travel faster than light. But there seems to be out there a secret network of actions determined by currently unknown laws of physics, waiting to be discovered.

Perhaps this sort of ‘unconscious interconnectedness,’ binding the galaxies in the universe together, and making us able to perceive the world by forming the structure of our own nerve cells, is also responsible for hiding at the same time the deepest nature of the cosmic structure which produced the stars and our thoughts in the first place. Such a fine-tuning between ourselves and the universe also relates to the anthropic principle. But the anthropic principle poses a serious question. In our search for extraterrestrial intelligent lifeforms, which life qualifies as ‘intelligent?’ Which forms qualify as ‘living?’ Should they be just like us? If they use different ways of communication how can we detect them? If their structure consists of exotic matter, how can we perceive them? What exactly are we looking for with our telescopes, beyond perhaps the reflections of our own image?

[http://astronaut.com/whos-revisiting-drake-equation/]

These are some historical aspects about Drake’s equation, according to Wikipedia:

In September 1959, physicists Giuseppe Cocconi and Philip Morrison published an article in the journal Nature with the provocative title ‘Searching for Interstellar Communications.’ Cocconi and Morrison argued that radio telescopes had become sensitive enough to pick up transmissions that might be broadcast into space by civilizations orbiting other stars.

Such messages, they suggested, might be transmitted at a wavelength of 21 cm (1,420.4 MHz). This is the wavelength of radio emission by neutral hydrogen, the most common element in the universe, and they reasoned that other intelligences might see this as a logical landmark in the radio spectrum.

Two months later, Harvard University astronomy professor Harlow Shapley speculated on the number of inhabited planets in the universe, saying “The universe has 10 million, million, million suns similar to our own. One in a million has planets around it. Only one in a million, million, has the right combination of chemicals, temperature, water, days and nights to support planetary life as we know it. This calculation arrives at the estimated figure of 100 million worlds where life has been forged by evolution.”

Seven months after Cocconi and Morrison published their article, Drake made the first systematic search for signals from extraterrestrial intelligent beings. Using the 25 m dish of the National Radio Astronomy Observatory in Green Bank, West Virginia, Drake monitored two nearby Sun-like stars: Epsilon Eridani and Tau Ceti. In this project, which he called Project Ozma, he slowly scanned frequencies close to the 21 cm wavelength for six hours per day from April to July 1960. The project was well designed, inexpensive, and simple by today’s standards. It was also unsuccessful.

Soon thereafter, Drake hosted a ‘search for extraterrestrial intelligence’ meeting on detecting their radio signals. The meeting was held at the Green Bank facility in 1961. The ten attendees were conference organizer J. Peter Pearman, Frank Drake, Philip Morrison, businessman and radio amateur Dana Atchley, chemist Melvin Calvin, astronomer Su-Shu Huang, neuroscientist John C. Lilly, inventor Barney Oliver, astronomer Carl Sagan and radio-astronomer Otto Struve. The equation that bears Drake’s name arose out of his preparations for the meeting.

$$N = R_{*} \cdot f_{p} \cdot n_{e} \cdot f_{l} \cdot f_{i} \cdot f_{c} \cdot L$$

where

N = the number of civilizations in our galaxy with which communication might be possible.

R∗ = the average rate of star formation in our galaxy

fp = the fraction of those stars that have planets

ne = the average number of planets that can potentially support life per star that has planets

fl = the fraction of planets that could support life that actually develop life at some point

fi = the fraction of planets with life that actually go on to develop intelligent life (civilizations)

fc = the fraction of civilizations that develop a technology that releases detectable signs of their existence into space.

L = the length of time for which such civilizations release detectable signals into space.

The Drake equation amounts to a summary of the factors affecting the likelihood that we might detect radio-communication from intelligent extraterrestrial life. The last four parameters, fl, fi, fc, and L, are not known and are very difficult to estimate, with values ranging over many orders of magnitude. Therefore, the usefulness of the Drake equation is not in the solving, but rather in the contemplation of all the various concepts which scientists must incorporate when considering the question of life elsewhere, and gives the question of life elsewhere a basis for scientific analysis.

There is considerable disagreement on the values of these parameters, but the ‘educated guesses’ used by Drake and his colleagues in 1961 were:

R* = 1 star/y (1 star formed per year, on the average over the life of the galaxy).

fp = 0.2 to 0.5 (one fifth to one half of all stars formed will have planets).

ne = 1 to 5 (stars with planets will have between 1 and 5 planets capable of developing life).

fl = 1 (100% of these planets will develop life).

fi = 1 (100% of which will develop intelligent life).

fc = 0.1 to 0.2 (10–20% of which will be able to communicate).

L = 1,000 to 100,000,000 years (which will last somewhere between 1,000 and 100,000,000 years).

Inserting the above minimum numbers into the equation gives a minimum N of 20. Inserting the maximum numbers gives a maximum N of 50,000,000. Drake states that given the uncertainties, the original meeting concluded that N≈L, and there were probably between 1,000 and 100,000,000 civilizations in the Milky Way galaxy.

The astronomer Carl Sagan speculated that all of the terms, except for the lifetime of a civilization, are relatively high and the determining factor in whether there are large or small numbers of civilizations in the universe is the civilization lifetime, or in other words, the ability of technological civilizations to avoid self-destruction. In Sagan’s case, the Drake equation was a strong motivating factor for his interest in environmental issues and his efforts to warn against the dangers of nuclear warfare.

[https://en.wikipedia.org/wiki/Drake_equation]
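The minimum and maximum figures quoted above can be checked by multiplying the seven terms of the equation together. The sketch below does exactly that, using only the 1961 ‘educated guesses’ listed in the text. The function name drake and the dictionary names LOW and HIGH are hypothetical, chosen here for illustration.

```python
from math import prod


def drake(r_star, f_p, n_e, f_l, f_i, f_c, lifetime):
    """Drake's equation: N is the product of its seven terms."""
    return prod((r_star, f_p, n_e, f_l, f_i, f_c, lifetime))


# Lower and upper ends of the 1961 estimates quoted in the text.
LOW = dict(r_star=1, f_p=0.2, n_e=1, f_l=1, f_i=1, f_c=0.1, lifetime=1_000)
HIGH = dict(r_star=1, f_p=0.5, n_e=5, f_l=1, f_i=1, f_c=0.2,
            lifetime=100_000_000)

if __name__ == "__main__":
    print(f"minimum N = {drake(**LOW):,.0f}")
    print(f"maximum N = {drake(**HIGH):,.0f}")
```

Because N is a plain product, the result is dominated by whichever term spans the widest range, which in these estimates is the lifetime L.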

There have been various estimates using Drake’s equation. Criticism aside, the equation may provide a good exercise for anyone who would like to delve deeper into the mysteries of the universe, and at the same time understand oneself better. One may even set all the other factors equal to 1, so that the determining factor will be L, the expected lifespan of a communicating intelligent civilization, given that they do want to communicate. This is why Carl Sagan pointed out the role of self-preservation and environmental protection.

At the moment we know that we have been using detectable communications approximately for the last 100 years:

The first radio news program was broadcast August 31, 1920 by station 8MK in Detroit, Michigan, which survives today as all-news format station WWJ under ownership of the CBS network.

[https://en.wikipedia.org/wiki/History_of_radio]

Thus, in our case, L=100 years. Setting all the other factors in Drake’s equation equal to 1, and assuming that the current production rate of new stars in the Milky Way is also approximately equal to 1 per year, the possible number of other civilizations like our own in the Milky Way will be N=100. If the Neolithic Revolution lasted about 10,000 years on our planet, then L=10,000, and there will be N=10,000 civilizations at the stage of the Neolithic Revolution right now in the Milky Way. If the Stone Age lasted 2.5 million years (since the first evidence of stone-tool use) on our planet, then L=2,500,000, and there will be an equal number N of ‘stone people’ on other planets in the Milky Way. And so on. This will be true if we set the other factors equal to 1, so that we identify N with L.

In what follows, each of the factors in Drake’s equation will be explored independently.

The number N* of stars in the Milky Way can be determined if we know the rate R* of new stars, and multiply the rate R* by the lifespan L* of a star, so that it will be

$$N_{*} = R_{*} \cdot L_{*}$$

2.2.1 Rate of stars

It is estimated that the Milky Way churns out seven new stars per year:

By mapping patches of radioactive aluminum in the Milky Way, scientists could determine the number of stars that are born and die each year in our galaxy.

In an investigation smacking of forensic detective work, scientists have measured the rate of star death and rebirth in our galaxy by combing through the sparse remains of exploded stars from the last few million years.

The scientists used the European Space Agency’s INTEGRAL (International Gamma-Ray Astrophysics Laboratory) satellite to explore regions of the galaxy shining brightly from the radioactive decay of aluminum-26, an isotope of aluminum. This aluminum is produced in massive stars and in their explosions, called supernovae, and it emits a telltale light signal in the gamma-ray energy range. A global spatial distribution model was used to convert the observed gamma-ray flux to a total amount of aluminum-26.

The team’s multi-year analysis revealed three key findings: The team confirmed that aluminum-26 is found primarily in star-forming regions throughout the galaxy; about once every 50 years a massive star will go supernova in our galaxy (yes, we are overdue); and each year our galaxy creates on average about seven new stars. These rates also imply that per year about three to four solar masses of interstellar gas are converted to stars. About ten billion years into its life, the Milky Way galaxy has now converted about 90 percent of its initial gas content into stars.

[http://www.nasa.gov/centers/goddard/news/topstory/2006/milkyway_seven.html]

But if about 7 stars are born in the Milky Way each year, amounting to 3-4 solar masses (so that a new star in the Milky Way has an average mass of about 0.5 times the mass of our Sun), how many stars die at the same time? Roughly speaking, we may have one star dying in the Milky Way for each new star which is born:

We usually talk of star formation in terms of the gas mass that is converted into stars each year. We call this the star formation rate. In the Milky Way right now, the star formation rate is about 3 solar masses per year (i.e. three times the mass of the Sun’s worth of star is produced each year). The stars formed can either be more or less massive than the Sun, though less massive stars are more numerous. So roughly if we assume that on average the stars formed have the same mass as the Sun, then the Milky Way produces about 3 new stars per year. People often approximate this by saying there is about 1 new star per year.

Now what about the rate at which stars die? In typical galaxies like the Milky Way, a massive star should end its life as a supernova about every 100 years. Less massive stars (like the Sun) end their lives as planetary nebulae, leading to the formation of white dwarfs. There are about one of these per year.

Therefore we get on average about one new star per year, and one star dying each year as a planetary nebula in the Milky Way. These rates are different in different types of galaxies, but you can say that this is roughly the average over all galaxies in the Universe. We estimate at about 100 billion the number of galaxies in the observable Universe, therefore there are about 100 billion stars being born and dying each year, which corresponds to about 275 million per day, in the whole observable Universe.

[http://curious.astro.cornell.edu/about-us/83-the-universe/stars-and-star-clusters/star-formation-and-molecular-clouds/400-how-many-stars-are-born-and-die-each-day-beginner]
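The figures quoted in this subsection can be cross-checked with a few lines of arithmetic. The sketch below is only illustrative: the numbers (7 stars and 3 to 4 solar masses per year, 100 billion galaxies, one star per galaxy per year) come from the passages above, while the function names and the 365.25-day year are assumptions.

```python
DAYS_PER_YEAR = 365.25  # assumed length of the year for the conversion


def average_star_mass(solar_masses_per_year, stars_per_year):
    """Average mass of a newborn star, in solar masses."""
    return solar_masses_per_year / stars_per_year


def stars_per_day(galaxies, stars_per_galaxy_per_year=1.0):
    """Stars born (or dying) per day across the given number of galaxies."""
    return galaxies * stars_per_galaxy_per_year / DAYS_PER_YEAR


if __name__ == "__main__":
    # 3 to 4 solar masses spread over 7 new stars per year.
    low = average_star_mass(3.0, 7)
    high = average_star_mass(4.0, 7)
    print(f"average mass: {low:.2f} to {high:.2f} solar masses")
    # 100 billion galaxies, each forming about one star per year.
    print(f"stars per day: {stars_per_day(100e9):,.0f}")
```

The average mass falls between roughly 0.4 and 0.6 solar masses, consistent with the figure of about 0.5 used above, and the daily rate comes out close to the quoted 275 million.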

The approximation of one star dying for each star born roughly means that our Milky Way galaxy is reaching a stage of equilibrium, where the net rate of change in the number of stars is currently close to zero, so that we are near the peak population of stars. This may be fundamental for the appearance of life in star systems, because such an equilibrium may create stable conditions for the appearance and maintenance of life.

2.2.2 Lifespan of stars

Our Sun is not an average star in the Milky Way, as its mass is considerably larger (at least twice as large) than the average star’s. The average star in the Milky Way is in fact a low-mass red dwarf. Red dwarfs are much more numerous than Sun-like stars, and they live much longer. The less massive a star is, the longer it lives. However, whether red dwarfs can sustain life is debatable.

The lifespan of a star is estimated as follows:

Representative lifetimes of stars as a function of their masses

Stellar evolution is the process by which a star changes over the course of time. Depending on the mass of the star, its lifetime can range from a few million years for the most massive to trillions of years for the least massive, which is considerably longer than the age of the universe. The table shows the lifetimes of stars as a function of their masses. All stars are born from collapsing clouds of gas and dust, often called nebulae or molecular clouds. Over the course of millions of years, these protostars settle down into a state of equilibrium, becoming what is known as a main-sequence star.

Nuclear fusion powers a star for most of its life. Initially the energy is generated by the fusion of hydrogen atoms at the core of the main-sequence star. Later, as the preponderance of atoms at the core becomes helium, stars like the Sun begin to fuse hydrogen along a spherical shell surrounding the core. This process causes the star to gradually grow in size, passing through the subgiant stage until it reaches the red giant phase. Stars with at least half the mass of the Sun can also begin to generate energy through the fusion of helium at their core, whereas more-massive stars can fuse heavier elements along a series of concentric shells. Once a star like the Sun has exhausted its nuclear fuel, its core collapses into a dense white dwarf and the outer layers are expelled as a planetary nebula. Stars with around ten or more times the mass of the Sun can explode in a supernova as their inert iron cores collapse into an extremely dense neutron star or black hole. Although the universe is not old enough for any of the smallest red dwarfs to have reached the end of their lives, stellar models suggest they will slowly become brighter and hotter before running out of hydrogen fuel and becoming low-mass white dwarfs.

[https://en.wikipedia.org/wiki/Stellar_evolution]

On average, main-sequence stars are known to follow an empirical mass-luminosity relationship. The luminosity (L) of the star is roughly proportional to the total mass (M) as the following power law:

$$L \propto M^{3.5}$$

The amount of fuel available for nuclear fusion is proportional to the mass of the star. Thus, the lifetime of a star on the main sequence can be estimated by comparing it to solar evolutionary models. The Sun has been a main-sequence star for about 4.5 billion years and it will become a red giant in 6.5 billion years, for a total main sequence lifetime of roughly 10^10 years. Hence:

$$\tau_{MS} \approx 10^{10}\ \text{years} \left[\frac{M}{M_{\odot}}\right] \left[\frac{L_{\odot}}{L}\right] = 10^{10}\ \text{years} \left[\frac{M}{M_{\odot}}\right]^{-2.5}$$

where M and L are the mass and luminosity of the star, respectively, M☉ is a solar mass, L☉ is the solar luminosity and τMS is the star’s estimated main sequence lifetime.

Although more massive stars have more fuel to burn and might intuitively be expected to last longer, they also radiate a proportionately greater amount with increased mass. This is required by the stellar equation of state; for a massive star to maintain equilibrium, the outward pressure of radiated energy generated in the core not only must but will rise to match the titanic inward gravitational pressure of its envelope. Thus, the most massive stars may remain on the main sequence for only a few million years, while stars with less than a tenth of a solar mass may last for over a trillion years.

[https://en.wikipedia.org/wiki/Main_sequence]

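This gives us a way to estimate the rate of new stars in the Milky Way. Assuming an average mass MAV for MS (main sequence) stars of 0.4M☉ (solar masses), the timespan τMS of an average MS star will be,

$$\tau_{MS} \approx 10^{10}\ \text{years} \times (0.4)^{-2.5} \approx 9.9 \times 10^{10}\ \text{years}$$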

Therefore if N* is the total number of MS stars in the Milky Way, and we identify the lifespan L* of an average MS star with the time τMS, then the rate R* of new MS stars will be,

$$R_{*} = \frac{N_{*}}{L_{*}} = \frac{N_{*}}{\tau_{MS}}$$
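The lifetime scaling and the resulting rate of new stars can be sketched numerically. This is a rough illustration rather than a definitive calculation: the solar lifetime of 10^10 years, the exponent of -2.5, and the average mass of 0.4 solar masses come from the text, while the function names and the total of 100 billion MS stars are assumptions introduced only for the example.

```python
SOLAR_MS_LIFETIME_YR = 1e10  # main-sequence lifetime of the Sun (from the text)


def ms_lifetime_years(mass_solar):
    """Main-sequence lifetime: 10^10 years * (M / M_sun)^-2.5."""
    return SOLAR_MS_LIFETIME_YR * mass_solar ** -2.5


def formation_rate(n_stars, mass_solar):
    """Rate of new stars per year, R* = N* / tau_MS."""
    return n_stars / ms_lifetime_years(mass_solar)


if __name__ == "__main__":
    for mass in (0.1, 0.4, 1.0, 10.0):
        print(f"M = {mass:>4} M_sun: {ms_lifetime_years(mass):.2e} years")
    # ASSUMPTION: 100 billion MS stars in the Milky Way (illustrative value).
    assumed_n_stars = 1e11
    print(f"R* = {formation_rate(assumed_n_stars, 0.4):.2f} stars per year")
```

Under the assumed total of 100 billion stars, the rate comes out at about one new star per year, which is of the same order as the estimates quoted in section 2.2.1.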

2.2.3 Habitability of red dwarf systems

Although red dwarfs are the most abundant and long-lived MS stars in the Milky Way, many of which are observed to have terrestrial planets in orbit, so that such planetary systems would be the most common, the habitability of planets orbiting red dwarfs is debated:

Proxima Centauri, the closest star to the Sun at 4.2ly, is a red dwarf

A red dwarf is a small and relatively cool star on the main sequence, of either K or M spectral type. Red dwarfs range in mass from a low of 0.075 solar masses to about 0.50 solar masses and have a surface temperature of less than 4,000 K (compared with 5,778 K for the Sun).

Red dwarfs are by far the most common type of star in the Milky Way, at least in the neighborhood of the Sun, but because of their low luminosity, individual red dwarfs cannot easily be observed. From Earth, not one is visible to the naked eye. Proxima Centauri, the nearest star to the Sun, is a red dwarf, as are twenty of the next thirty nearest stars. According to some estimates, red dwarfs make up three-quarters of the stars in the Milky Way.

Stellar models indicate that red dwarfs less than 0.35 solar masses are fully convective. Hence the helium produced by the thermonuclear fusion of hydrogen is constantly remixed throughout the star, avoiding a buildup at the core. Red dwarfs therefore develop very slowly, having a constant luminosity and spectral type for, in theory, some trillions of years, until their fuel is depleted. Because of the comparatively short age of the universe, no red dwarfs of advanced evolutionary stages exist.

All observed red dwarfs contain metals, which in astronomy are elements heavier than hydrogen and helium. The Big Bang model predicts that the first generation of stars should have only hydrogen, helium, and trace amounts of lithium. With their extreme lifespans, any red dwarfs that were part of that first generation should still exist today. There are several explanations for the missing population of metal-poor red dwarfs. The preferred explanation is that, without heavy elements, only large stars can form. Large stars rapidly burn out and explode as supernovae, spewing heavy elements that then allow red dwarfs to form. Alternative explanations, such as metal-poor red dwarfs being dim and few in number, are considered less likely because they seemingly conflict with stellar evolution models.

Many red dwarfs are orbited by exoplanets but large Jupiter-sized planets are comparatively rare. Doppler surveys around a wide variety of stars indicate about 1 in 6 stars having twice the mass of the Sun are orbited by one or more Jupiter-sized planets, vs. 1 in 16 for Sun-like stars and only 1 in 50 for red dwarfs. On the other hand, microlensing surveys indicate that long-period Neptune-mass planets are found around 1 in 3 red dwarfs. Observations with HARPS further indicate 40% of red dwarfs have a ‘super-Earth’ class planet orbiting in the habitable zone where liquid water can exist on the surface of the planet.

Planetary habitability of red dwarf systems is subject to some debate. In spite of their great numbers and long lifespans, there are several factors which may make life difficult on planets around a red dwarf. First, planets in the habitable zone of a red dwarf would be so close to the parent star that they would likely be tidally locked. This would mean that one side would be in perpetual daylight and the other in eternal night. This could create enormous temperature variations from one side of the planet to the other. Such conditions would appear to make it difficult for forms of life similar to those on Earth to evolve. And it appears there is a great problem with the atmosphere of such tidally locked planets: the perpetual night zone would be cold enough to freeze the main gases of their atmospheres, leaving the daylight zone nude and dry. On the other hand, recent theories propose that either a thick atmosphere or planetary ocean could potentially circulate heat around such a planet.

Variability in stellar energy output may also have negative impacts on the development of life. Red dwarfs are often flare stars, which can emit gigantic flares, doubling their brightness in minutes. This variability may also make it difficult for life to develop and persist near a red dwarf. It may be possible for a planet orbiting close to a red dwarf to keep its atmosphere even if the star flares. However, more-recent research suggests that these stars may be the source of constant high-energy flares and very large magnetic fields, diminishing the possibility of life as we know it. Whether this is a peculiarity of the star under examination or a feature of the entire class remains to be determined.

[https://en.wikipedia.org/wiki/Red_dwarf]

Perhaps life could exist on a planet orbiting a red dwarf under special conditions. For example, such lifeforms may live underground most of the time. But adaptations which we commonly associate with intelligence may then not be available (e.g. living on the surface of a planet may be necessary for the evolution of bipedalism). Furthermore, despite the intense flare activity of red dwarfs, their energy output is much lower than that of a Sun-like star, so that even if some of those lifeforms have evolved enough to become complex, the energy shortage may prevent them from advancing further. Thus a form of evolutionary ‘dwarfism,’ in keeping with the nature of a red-dwarf star, could be expected to persist indefinitely.

2.2.4 Populations of stars

Relatively recent observations show that the first stars appeared in the universe very early:

Results from NASA’s Wilkinson Microwave Anisotropy Probe (WMAP) released in February 2003 show that the first stars formed when the universe was only about 200 million years old. Observations by WMAP also revealed that the universe is currently about 13.7 billion years old. So it was very early in the time after the Big Bang explosion that stars formed.

Observations reveal that tiny clumps of matter formed in the baby universe; to WMAP, these clumps are seen as tiny temperature differences of less than one-millionth of a degree. Gravity then pulled in more matter from areas of lower density and the clumps grew. After about 200 million years of this clumping, there was enough matter in one place that the temperature got high enough for nuclear fusion to begin - providing the engine for stars to glow.

[http://starchild.gsfc.nasa.gov/docs/StarChild/questions/question55.html]

However, these first stars were very short-lived:

The incredibly bright and old CR7 galaxy, captured here in an artist’s impression, seems to shelter some of the universe’s earliest stars. This is the first time this type of star has ever been identified.

The first stars to condense after the Big Bang, called Population III stars, would have been up to 1,000 times larger than the sun. These Population III stars would have been short-lived, exploding as supernovas after just 2 million years of blazing life, releasing the elements they created. Later stars could form from those remnants and forge even heavier elements.

[http://www.space.com/29685-bright-galaxy-first-stars-universe.html]

Stars can be divided into three groups, according to the population to which they belong:

Population I (or Generation III), or metal-rich stars, are young stars with the highest metallicity out of all three populations. The Earth’s Sun is an example of a metal-rich star. These are common in the spiral arms of the Milky Way galaxy.

Generally, the youngest stars, the extreme population I, are found farther toward the center of a galaxy, and intermediate population I stars are farther out. The Sun is an intermediate population I star. Population I stars have regular elliptical orbits of the galactic center, with a low relative velocity.

Between the intermediate population I and the population II stars comes the intermediary disc population.

Population II (or Generation II), or metal-poor stars, are those with relatively little metal. Intermediate population II stars are common in the bulge near the center of our galaxy, whereas population II stars found in the galactic halo are older and thus more metal-poor. Globular clusters also contain high numbers of population II stars.

Population III (or Generation I), or extremely metal-poor stars (EMP), are a hypothetical population of extremely massive and hot stars with virtually no metals, except possibly for intermixing ejecta from other nearby Pop III supernovae. Their existence is inferred from cosmology, but they have not yet been observed directly.

It was hypothesized that the high metallicity of population I stars makes them more likely to possess planetary systems than the other two populations, because planets, particularly terrestrial planets, are thought to be formed by the accretion of metals. However, observations of the Kepler data-set have found smaller planets around stars with a range of metallicities, while only larger, potential gas giant planets are concentrated around stars with relatively higher metallicity- a finding that has implications for theories of gas giant formation.

[https://en.wikipedia.org/wiki/Stellar_population]

Since the earlier a star formed in the universe, the poorer in metals it is likely to be, metallicity plays an important role in determining whether an old, metal-poor star can host a planetary system, and whether such a planetary system is suitable for life:

This image shows the critical metallicity for Earth-like planet formation, expressed as iron abundance relative to that of the Sun, as a function of distance (r) from the host star.

In new research, scientists have attempted to determine the precise conditions necessary for planets to form in a star system. Jarrett Johnson and Hui Li of Los Alamos National Laboratory assert that observations increasingly suggest that planet formation takes place in star systems with higher metallicities.

Astronomers use the term ‘metallicity’ in reference to elements heavier than hydrogen and helium, such as oxygen, silicon, and iron. In the ‘core accretion’ model of planetary formation, a rocky core gradually forms when dust grains that make up the disk of material that surrounds a young star bang into each other to create small rocks known as ‘planetesimals.’

Citing this model, Johnson and Li stress that heavier elements are necessary to form the dust grains and planetesimals which build planetary cores. Additionally, evidence suggests that the circumstellar disks of dust that surround young stars don’t survive as long when the stars have lower metallicities. The most likely reason for this shorter lifespan is that the light from the star causes clouds of dust to evaporate. Johnson and Li further state that disks with higher metallicity tend to form a greater number of high mass giant planets.

In order to obtain estimates of the critical metallicity necessary for planet formation, Johnson and Li compared the lifetime of the disk and the length of time required for dust grains in the disk to settle. Since the settling time for dust grains depends on the density and temperature of the disk, which are related to the distance from the host star, the critical metallicity is also a function of distance from the host star.

The team found that the formation of planetesimals can only take place once a minimum metallicity is reached in a proto-stellar disk. Since the earliest stars that formed in the universe (Population III stars) do not have the required metallicity to host planets, it is believed that the supernova explosions from such stars helped enrich subsequent (Population II) stars, some of which may still be in existence and could host planets.

Johnson and Li also note that the formation of Earth-like planets is not itself a sufficient prerequisite for life to take hold, stating that early galaxies contained numerous supernovae and black holes- both strong sources of radiation that would threaten life. Given the hostile conditions in the early universe, it is expected that conditions suitable for life were only present after early galaxy formation.

[http://www.astrobio.net/news-exclusive/when-stellar-metallicity-sparks-planet-formation/]
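The dependence of the critical metallicity on distance can be illustrated with a short numerical sketch. It assumes the relation [Fe/H]_crit ≈ -1.5 + log10(r / 1 AU), which is how the Johnson and Li result is commonly summarized; this formula does not appear in the article quoted above and should be checked against the original paper before being relied upon. The function names and the sample values are hypothetical and serve only as an illustration.

```python
import math


def critical_fe_h(r_au: float) -> float:
    """Minimum iron abundance [Fe/H] (log scale, Sun = 0) for planetesimals
    to form at distance r_au (in AU) from the host star.

    ASSUMPTION: uses [Fe/H]_crit ~ -1.5 + log10(r / 1 AU); this relation is
    not given in the quoted article.
    """
    if r_au <= 0:
        raise ValueError("distance must be positive")
    return -1.5 + math.log10(r_au)


def can_form_planetesimals(fe_h: float, r_au: float) -> bool:
    """True if a disk with metallicity fe_h reaches the assumed threshold."""
    return fe_h >= critical_fe_h(r_au)


# Hypothetical metallicities, checked at 1 AU and at 10 AU.
for fe_h in (0.0, -1.0, -2.0):
    for r_au in (1.0, 10.0):
        ok = can_form_planetesimals(fe_h, r_au)
        print(f"[Fe/H] = {fe_h:+.1f} at {r_au:4.1f} AU -> {ok}")
```

Under this assumed relation the threshold rises with distance, so a metal-poor star could in principle still form rocky bodies close in while failing to do so farther out.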

2.2.5 Peak population of MS stars

The fact that the vast majority of MS stars are red dwarfs can be interpreted as an indication that the Milky Way has exhausted most of its interstellar gas reserves, so that most of the stars it now produces are low mass stars (red dwarfs). This is also supported by the following observation:

Diagram above shows how star production has decreased over billions of years. The new results indicate that, measured by mass, the production rate of stars has dropped by 97 percent since its peak 11 billion years ago.

For years, scientists were confused by the disparity between the number of stars we can observe and the number of stars we know should have been created by the universe. Numerous studies have each looked at specific periods in our Universe’s history in order to assess star formation; however, since multiple methods were used, it was fairly difficult to aggregate the results and reach a comparative conclusion.

A team of international researchers decided to run a complete survey from the very dawn of the first stars using three telescopes- the UK Infrared Telescope and the Subaru Telescope, both in Hawaii, and Chile’s Very Large Telescope. Snapshots were taken of the Universe as it looked when it was 2, 4, 6 and 9 billion years old, a sample 10 times as large as that of any previous similar study. The astronomers concluded that the rate of formation of new stars in the Universe is now only 1/30th of its peak.

“You might say that the universe has been suffering from a long, serious ‘crisis:’ cosmic GDP output is now only 3 percent of what it used to be at the peak in star production!” said David Sobral of the University of Leiden in the Netherlands.

“If the measured decline continues, then no more than 5 percent more stars will form over the remaining history of the cosmos, even if we wait forever. The research suggests that we live in a universe dominated by old stars.”

According to the current accepted models of the Universe’s evolution, stars first began to form some 13.4 billion years ago, or 300 million years after the Big Bang. Now, these ancient stars were nothing like those commonly found throughout the cosmos today. These were like titans with respect to Olympus’ gods, hundreds of times more massive than our sun. Alas, the giants’ lives would have been short: quickly exhausting their fuel, they had only one million years or so. Lighter stars, like our own, in contrast can shine long and bright for billions of years.

It’s from these huge, first stars that other smaller, more long-lived stars formed as the cosmic dust was recycled. Our Sun, for example, is thought to be a third generation star, and has a typical mass, or weight, by today’s standards. To identify star formation, the astronomers searched for the H-alpha emission of hydrogen atoms, which appears as a bright red light.

“Half of these were born in the ‘boom’ that took place between 11 and 9 billion years ago and it took more than five times as long to produce the rest,” Sobral said. “The future may seem rather dark, but we’re actually quite lucky to be living in a healthy, star-forming galaxy which is going to be a strong contributor to the new stars that will form.”

[http://www.zmescience.com/space/universe-stop-making-news-stars-04343/]

The previous observation may seem discouraging, but in fact the opposite could be true. If the maximum rate of star formation occurred approximately 4-5 billion years after the Big Bang, and if the lifespan of a star like our Sun is roughly 10-12 billion years, then the peak population of Sun-like stars (those with a mass about equal to the mass of the Sun) will occur about 15-16 billion years after the Big Bang, or a couple of billion years, give or take, from now. If we also assume a delay of about 5 billion years for an intelligent civilization to appear in a Sun-like star system (as long as it took us), then the peak population of civilizations in the Milky Way will occur about 20 billion years after the Big Bang, or about 5-6 billion years from now. If this is true, then the problem of ‘cosmic silence’ (that it seems we are alone in the universe) could find an explanation, since only a small fraction of all the civilizations that will ever exist would have emerged so far.
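The arithmetic behind this estimate can be laid out as a minimal sketch. It simply adds the intervals quoted in the paragraph above, taking 13.7 billion years as the current age of the universe (the WMAP figure cited earlier). The helper names `shift` and `from_now` are hypothetical, and carrying the full low and high ends through the sums is an assumption of this sketch, so the resulting intervals are somewhat wider than the rounded figures in the text.

```python
# All times are in billions of years (Gyr) after the Big Bang.
AGE_NOW_GYR = 13.7  # current age of the universe, as quoted from WMAP


def shift(interval, delay):
    """Add a delay (a number or a (low, high) pair) to a (low, high) interval."""
    low, high = interval
    d_low, d_high = delay if isinstance(delay, tuple) else (delay, delay)
    return (low + d_low, high + d_high)


def from_now(interval, now=AGE_NOW_GYR):
    """Express an interval measured from the Big Bang relative to the present."""
    low, high = interval
    return (low - now, high - now)


sfr_peak = (4.0, 5.0)         # assumed epoch of maximum star formation
sun_lifespan = (10.0, 12.0)   # assumed lifespan of a Sun-like star
civ_delay = 5.0               # assumed time for a civilization to appear

star_peak = shift(sfr_peak, sun_lifespan)  # peak population of Sun-like stars
civ_peak = shift(star_peak, civ_delay)     # peak population of civilizations

for label, interval in (("Sun-like stars", star_peak),
                        ("civilizations", civ_peak)):
    low, high = interval
    rel_low, rel_high = from_now(interval)
    print(f"Peak of {label}: {low:.0f}-{high:.0f} Gyr after the Big Bang "
          f"({rel_low:.1f} to {rel_high:.1f} Gyr from now)")
```

The figures given in the text (15-16 and about 20 billion years) sit near the middle and the lower end of these intervals respectively, which shows how sensitive the conclusion is to the assumed epoch of peak star formation and to the assumed stellar lifespan.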

2.2.6 Generations of civilizations

Although conditions early in the history of the universe may not have been ripe for the appearance of life, and planetary systems may have been sparse because of the low metallicity of their parent stars, life may still have had the opportunity to appear and evolve around some Population II stars. A related question was posed on Quora:

“How long has our galaxy harbored Class F, G, and K stars with potentially life-sustaining terrestrial planets? I’ve pondered this question for a while and tried to get answers from the web to no avail.

However, it just occurred to me that our sun, and other main sequence stars of 0.3 to 8 solar masses, will eventually become Red Giants. Therefore, the Red Giants we see today (e.g. Aldebaran, Arcturus, Gamma Crucis, etc.) used to be sun-like and, as such, probably had habitable zones for billions of years. Moreover, Aldebaran appears to me to be a Population I Star, meaning that it has a metallicity comparable to our sun’s; a trait that is associated with the development of terrestrial planets.

Since it would take another 5 billion years for our sun to become a Red Giant, we can extrapolate that Aldebaran et al. may have possessed potentially life-bearing planets as early as 5 billion years ago, most likely more.”

[https://www.quora.com/How-long-has-our-galaxy-harbored-Class-F-G-and-K-stars-with-potentially-life-sustaining-terrestrial-planets]

The oldest planetary system found so far (as of 27 January 2015) is Kepler-444:

This is an artistic impression of the Kepler-444 planetary system

[http://www.sci-news.com/astronomy/science-kepler-444-five-exoplanets-11-billion-year-old-star-02437.html]

Kepler-444 is a star system which consists of an orange main sequence primary star of spectral type K0, and a pair of M-dwarf stars, 116 light-years away from Earth in the constellation Lyra. The system is approximately 11.2 billion years old, more than 80% of the age of the universe, whereas the Sun is only 4.6 billion years old.

On 27 January 2015, the Kepler spacecraft is reported to have confirmed the detection of five sub-Earth-sized rocky exoplanets orbiting the primary star. Preliminary results of the planetary system around Kepler-444 were first announced at the second Kepler science conference in 2013. The original research on Kepler-444 was published in The Astrophysical Journal on 27 January 2015.

All five rocky exoplanets are smaller than the size of Venus (but bigger than Mercury) and each of the exoplanets completes an orbit around the host star in less than 10 days. Even the furthest planet, Kepler-444f, still orbits closer to the star than Mercury is to the Sun. According to NASA, no life as we know it could exist on these hot exoplanets, due to their close orbital distances to the host star.

[https://en.wikipedia.org/wiki/Kepler-444]

As far as Aldebaran is concerned, it is classified as a type K5 III star, which indicates it is an orange-hued giant star that has evolved off the main sequence. It is also possible that the star is orbited by a hot Jupiter.

[https://en.wikipedia.org/wiki/Aldebaran]